Using pocketapi to Understand my Reading Behavior

I cannot remember anymore when I started using Pocket, but I certainly remember realizing quickly that it was exactly what I needed. My elaborate collection of browser bookmarks that I wanted to read “some day/maybe” quickly turned into a massive Pocket queue with articles spanning more than a decade. I don’t know if I’ll ever finish reading it, but at least I feel somewhat in control and I enjoy reading a few articles in bed on a lazy Sunday morning.

Recently, I was able to contribute a few lines of code to a new R package, “pocketapi”, which makes it possible to interact with the Pocket API from R and was recently published on CRAN (GitHub repository). I joined the project late, when the main parts had already been written by others (hat tip to Frie, Yanik, Sara, Dorian and Christoph), but I could add a few smaller functions and wrote part of the vignette showcasing the package.

Using the package and analyzing the data in R has helped me to understand a bit better how I read articles on Pocket. Below are a few quick analyses that I have done, also illustrating the basic usage of the package. (Data is from December 5th, 2020. In the code below, I’m skipping the somewhat tedious step of connecting with the Pocket API; this is explained in the package vignette.)

Length of Pocket Queue

Loading the data from Pocket is relatively straightforward. Note that the “state” argument allows us to specify that we are only interested in unread articles which are not yet archived. At the moment, I have 1145 unread articles in my queue. There are various pieces of information on each article, but especially the URL, the title and the word count seem interesting.

library(pocketapi)

data_unread <- pocket_get(state = "unread")

dim(data_unread) # dimensions of the data frame

## [1] 1145 16

colnames(data_unread) # variable names

## [1] "item_id" "resolved_id" "given_url" "given_title"

## [5] "resolved_title" "favorite" "status" "excerpt"

## [9] "is_article" "has_image" "has_video" "word_count"

## [13] "tags" "authors" "images" "image"

Below are a few quick summary statistics of the article length.

library(dplyr)

summary_unread <- summarize(data_unread,

total_words = sum(word_count),

article_count = n(),

mean_words = mean(word_count),

median_words = median(word_count),

quantile_25 = quantile(word_count, probs = 0.25),

quantile_75 = quantile(word_count, probs = 0.75),

quantile_95 = quantile(word_count, probs = 0.95))

t(summary_unread)

## [,1]

## total_words 4174594.000

## article_count 1145.000

## mean_words 3645.934

## median_words 3041.000

## quantile_25 2379.000

## quantile_75 4351.000

## quantile_95 7706.200

Overall, the unread articles have a total of 4 174 594 words. As a comparison, all seven books of the Harry Potter series combined have approx. 1.1 million words (source). So my current queue is more than three times as long! Considering that I keep adding new articles from time to time, my Pocket list will contain reading material for quite a while…
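To put these totals into perspective, here is a small back-of-the-envelope calculation in base R. It only reuses the figures reported above; the reading speed of 400 words per minute is an assumption, and the variable names are made up for this sketch.

```r
# Figures copied from the summary above
total_words  <- 4174594   # total word count of the unread queue
median_words <- 3041      # median word count per article
potter_words <- 1.1e6     # approximate length of the Harry Potter series

# Assumption: a reading speed of 400 words per minute
words_per_minute <- 400

queue_vs_potter <- total_words / potter_words          # queue length in "Harry Potter series"
total_hours     <- total_words / words_per_minute / 60 # pure reading time for the whole queue
median_minutes  <- median_words / words_per_minute     # reading time of a median article

round(c(queue_vs_potter = queue_vs_potter,
        total_hours     = total_hours,
        median_minutes  = median_minutes), 1)
```

Under these assumptions, the queue is roughly 3.8 times the length of the series and would take about 174 hours of uninterrupted reading, while a median article takes under 8 minutes.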

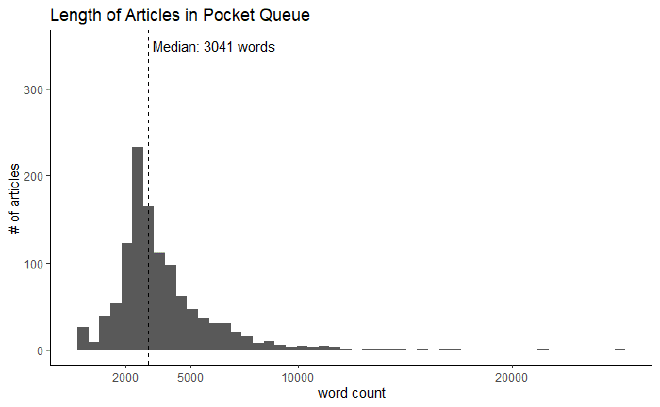

Luckily, this enormous text comes in the form of many small pieces. Assuming a reading speed of approx. 400 words per minute, I will be able to read an average article in about 10 minutes. The plot below shows the distribution of the word count per article. The peak between approx. 2000 and 5000 words is quite pronounced, which corresponds to a reading time of roughly 5 to 12 minutes, but there are indeed some long reads with more than 10k words.

library(ggplot2)

ggplot(data = data_unread, aes(x = word_count)) +

geom_histogram(bins = 50) +

geom_vline(aes(xintercept = median(word_count)), linetype = "dashed") +

scale_x_continuous(breaks = c(2000, 5000, 10000, 20000)) +

labs(title = "Length of Articles in Pocket Queue",

x = "word count", y = "# of articles") +

annotate(geom = "text", x = summary_unread$median_words + 200, y = 350,

label = "Median: 3041 words", hjust = "left") +

theme_classic()
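The same idea can be expressed in reading time rather than word count. The sketch below converts word counts into minutes and groups them into buckets with cut(). The word counts are made up for illustration and are not taken from my queue, and both the 400 words per minute and the bucket boundaries are assumptions.

```r
# Made-up word counts for illustration only; not real data from the queue
word_count <- c(800, 2100, 3041, 4800, 7700, 12500)

# Assumption: a reading speed of 400 words per minute
words_per_minute <- 400
reading_minutes <- word_count / words_per_minute

# Group the reading times into buckets; each interval includes its upper bound
bucket <- cut(reading_minutes,
              breaks = c(0, 5, 12.5, 25, Inf),
              labels = c("up to 5 min", "5 to 12.5 min",
                         "12.5 to 25 min", "over 25 min"))

# Number of articles per reading-time bucket
table(bucket)
```

With the real data, word_count would simply be replaced by data_unread$word_count.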

Most Popular Websites

As a next step, I want to understand not only how long the articles are, but also what I am reading. I follow a couple of newspaper accounts on Twitter and often use them to populate my Pocket queue, but I also save the occasional link that I happen to stumble upon. The code snippet below isolates the website from the URL based on the pattern of forward slashes. The top 10 sources show three basic dimensions:

- national German news magazines (Die Zeit, leading with considerable distance) and brandeins/b1 as a German business magazine that often covers somewhat unusual stories and is quite different from the standard business news

- various English news websites (NYT, The Guardian, The New Yorker, The Atlantic)

- some aggregators (Medium, articles saved via Pocket’s own website, bit.ly in the widest possible sense)

Together, those top 10 sources represent approx. half of the queue.
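To see what the domain extraction does in isolation, it can be tried on a handful of URLs first. The following is a minimal sketch in base R rather than the stringr-based code used for the analysis; the helper extract_domain() and the example URLs are made up for illustration.

```r
# Hypothetical helper, not part of pocketapi or stringr
extract_domain <- function(url) {
  # drop the scheme, e.g. "https://"
  host <- sub("^[a-zA-Z][a-zA-Z0-9+.-]*://", "", url)
  # drop everything from the first "/", "?" or "#" onwards
  host <- sub("[/?#].*$", "", host)
  # strip "www." only when it appears at the very start of the host
  sub("^www\\.", "", host)
}

# Made-up example URLs
urls <- c("https://www.zeit.de/wissen/2020-11/example",
          "http://bit.ly/abc123",
          "https://getpocket.com/explore/item/example")

extract_domain(urls)
## [1] "zeit.de"       "bit.ly"        "getpocket.com"
```

Anchoring the "www." pattern at the start of the host avoids accidentally cutting it out of the middle of a domain name.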

library(stringr)

# extracting the domain from the URL

data_unread$domain <- str_extract(string = data_unread$given_url,

pattern = "[/]{2}[^/]+")

data_unread$domain <- str_replace_all(string = data_unread$domain,

pattern = "[/]{2}", replacement = "")

data_unread$domain <- str_replace_all(string = data_unread$domain,

pattern = "^www\\.", replacement = "")

# aggregating on domain level

domain_count <- data_unread %>%

count(domain) %>%

group_by(domain) %>%

arrange(desc(n))

# adding percentage and cumulative percentages

domain_count$percent <- round(domain_count$n / sum(domain_count$n) * 100, digits = 3)

domain_count$cum_percent <- cumsum(domain_count$percent)

domain_count[1:10,]

## # A tibble: 10 x 4

## # Groups: domain [10]

## domain n percent cum_percent

## <chr> <int> <dbl> <dbl>

## 1 zeit.de 218 19.0 19.0

## 2 nytimes.com 59 5.15 24.2

## 3 medium.com 58 5.07 29.3

## 4 getpocket.com 54 4.72 34.0

## 5 newyorker.com 51 4.45 38.4

## 6 theguardian.com 50 4.37 42.8

## 7 b1.de 28 2.44 45.2

## 8 bit.ly 26 2.27 47.5

## 9 brandeins.de 24 2.10 49.6

## 10 theatlantic.com 19 1.66 51.3

Favorited Articles

Pocket allows users to mark articles as favorites, making it easier to find them in the archive. I use this function from time to time, but I don’t know how often. Looking into my archive, I can see that only 25 articles (of the last 5000) are favorited, which corresponds to a percentage of 0.5%.

data_read <- pocket_get(state = "archive")

table(data_read$favorite)

##

## FALSE TRUE

## 4975 25

Pocket limits the API call to the last 5000 items in the archive, although the archive could in theory contain more. As a little trick, passing favorite = TRUE to pocket_get() returns only the favorited articles from the archive.

data_favorited <- pocket_get(favorite = TRUE, state = "archive")

nrow(data_favorited)

## [1] 367

This shows that there are 367 favorited articles in total, but only 25 of them are part of the most recent 5000 archived items.
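One way to check how the two results relate is to compare the item ids of both data frames. The sketch below uses made-up ids in place of the real API results and assumes that item_id identifies an article uniquely across both calls, which I have not verified here.

```r
# Made-up stand-ins for the two API results; the ids are illustrative only
archive_recent <- data.frame(item_id  = c("101", "102", "103", "104"),
                             favorite = c(FALSE, TRUE, FALSE, TRUE))
favorites_all  <- data.frame(item_id  = c("102", "104", "007", "042"))

# TRUE for favorites that also appear in the recent archive window
in_recent <- favorites_all$item_id %in% archive_recent$item_id

sum(in_recent)   # favorites inside the recent archive
sum(!in_recent)  # older favorites, only reachable via favorite = TRUE
```

With the real data, archive_recent and favorites_all would be data_read and data_favorited.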

I like to think of myself as somebody who likes to read longer articles, but I’m not sure if this also shows in the data. A t-test suggests that it does: favorited articles are on average about 600 words longer than the non-favorited articles in the archive (a significant difference with p < 0.01).

t.test(x = data_favorited$word_count, y = data_read$word_count[!data_read$favorite])

##

## Welch Two Sample t-test

##

## data: data_favorited$word_count and data_read$word_count[!data_read$favorite]

## t = 7.3947, df = 395.64, p-value = 8.535e-13

## alternative hypothesis: true difference in means is not equal to 0

## 95 percent confidence interval:

## 432.7141 746.1215

## sample estimates:

## mean of x mean of y

## 1851.046 1261.629

I have learnt quite a bit about my own reading behavior from these few small analyses. The pocketapi package makes it quite easy to interact with the API and, for example, to rebuild add-on applications like Read Ruler with a few lines of R code. If you’re using both Pocket and R, this might be a package for you!